A few years ago, like many others around the world, I read Yuval Noah Harari’s book Sapiens. It is a fascinating look at human history from the perspectives of anthropology, sociology and economics. Also, like many others in computer science, I have been thinking about and working with what Andrej Karpathy dubbed “Software 2.0”, a bold and pragmatic view of how machine learning is transforming our industry. Drawing on these writings and my own experience, I decided to write about Knowledge 4.0, an idea that has been on my mind for a while.

Intelligence has always been somewhat ill-defined. In the midst of the current deep learning explosion, as the Turing Test started to feel irrelevant, many have once again tried to define what artificial general intelligence is. Critics say this is just part of the artificial intelligence hype cycle: whenever we reach a target, we redefine the problem.

Having spent much of my adult life thinking about the representation of information, I find my mind usually wanders toward a more humble concept: knowledge. Whereas intelligence revolves around some process of creating or manipulating ideas, knowledge is usually seen in a much more static fashion. A snapshot of the world, or some simplification of it, but ultimately a useful representation of a state of things. The product of applying (some sort of) intelligence.

As humans, our ability to create and access knowledge has changed meaningfully no more than a handful of times, but always with vast consequences for our societies.

In our early nomadic days, we lived as hunter-gatherers, and the amount of knowledge each group of humans possessed was rather limited. Each individual knew only what they had seen or otherwise sensed. At best, one acquired some knowledge indirectly by observing physically co-located peers. In fact, in his book Harari claims that humans at that time had bigger brains and were more “intelligent”. That is no surprise. Beyond some innate traits from our genes, the knowledge our ancestors needed to survive had to be recreated constantly through sensory processing in hostile and ever-changing environments. Whereas Sapiens looks at us through the lens of work and society, the ideas developed here are all contained in the world of culture.

The first revolution came when a fantastic and rarely recognized technology was invented: speech. The ability to communicate is innate to us and can be observed in many other species, including not only primates but birds and even more primitive animals. A gorilla can master many symbols through its hands. But modulating sound through our digestive apparatus to convey a large set of phonemes, and combining those into a vast number of distinct symbols, is a technological invention of our society. One that certainly took some brilliant individuals to bootstrap: there is no evidence it was developed more than once, the feral children who have been observed did not develop it on their own, and speech has never been seen developing in fully isolated societies.

Speech was so utterly successful that it has been adopted by virtually the entire population, an adoption rate probably higher than that of any other technology in history. The creation of knowledge had not changed much yet: one still had to rely on one’s own senses to capture the world and use one’s intelligence to compress that input into a representation that fit in the brain. Now, however, that representation could be expressed in words and passed along. The transmission of knowledge flowed through a funny channel: waves in the air, captured by little bones on the sides of our heads and mapped to abstract symbols our brains agreed upon by convention as we were taught our local languages.

With language, we could create stories: small, compressed representations of aspects of the world that told us how to react to situations we had never experienced directly, and helped us master nature. As our pool of knowledge grew, we took up agriculture and settled down. We created rhymed poetry, a funny trick to decrease entropy in speech, making memorization easier and stories more noise-resistant. As we now knew more and required less intelligence to survive, our brains shrank.

As a medium for storing and transmitting knowledge, the combination of speech and memory was still limited. To access knowledge, one had to be physically co-located with another individual who already possessed it, and that individual had, of course, to be alive. Both speaker and listener had to spend time. And if the person or group that exclusively possessed some knowledge died, the knowledge was gone; it could only be recreated through sensory experience and intelligence. It took hundreds of thousands of years for the next leap: written language.

For the first time, knowledge had a general physical form that we could touch with our hands, move around and share with friends. Knowledge, once encoded in a book, can be decoded by many people. With such an enormous increase in the payoff of the encoding investment, the world was transformed. Shared stories had much more reach, and our culture could spread across much larger groups. Accidental corruption of data became much less of an issue. When the Dead Sea Scrolls were discovered, we could verify that over more than a thousand years little of the Torah had changed, a property impossible to maintain through oral history alone. The invention of the printing press in the 15th century supercharged the properties of written media and ignited a series of revolutions that brought us to the modern world.

Thanks to writing, we went from the village level to the nation-state level. Ancillary developments, like television, brought us a global culture. Television, with its cameras, may at first glance seem revolutionary in itself; after all, it captures a much rawer form of sensory input. However, one does not efficiently access knowledge through a raw camera feed, and what we watch on TV are scripted shows, which go through the same one-time compression by intelligence into storytelling, so that knowledge can be transmitted through the air and decoded in parallel by viewers. A richer medium, but ultimately of the same nature as writing. I used to joke that God gave us this miracle but restricted it to a handful of channels so that all of us would need to buy cable to pay for our sins.

Jokes aside, up to this point we were pretty much bound by Shannon’s law, with our brains as the channel. What we call work was essentially using intelligence and muscle to put things into that knowledge pool and take things out of it for real-world application. The one place we could bypass the choke point of our brains was the custom tools we built, which encoded knowledge in a repeatable, readily available form. Once a car is built, you can just drive it. You no longer need to consult the knowledge pool to figure out how to build a new car every time you want to travel around. But unlike the knowledge pool, where ideas were freely composed and accumulated collectively, machines were clumsy at composition.

The third revolution is much more recent, and its impact has been equally transformative. Whereas the transformations of knowledge we have discussed so far concerned how to encode and transmit it, the third revolution happened on the decoding and application side. We invented software, which runs on what Alan Turing described as a universal machine: something that could compute anything computable. Creating knowledge is still the same complex process that requires intelligence. A computer scientist still needs to observe the world and compress it into useful abstractions that fit in her brain, and she will later encode them as computer instructions instead of words. But software no longer requires decoding, and it composes nicely, even better than any knowledge representation we had before. Software is a configuration of a universal machine, and it dispenses with the limitations of the special-purpose machines we had built until then. To use software we do not need to understand it or even read it, and we are no longer constrained by the role of our brains in Shannon’s law.

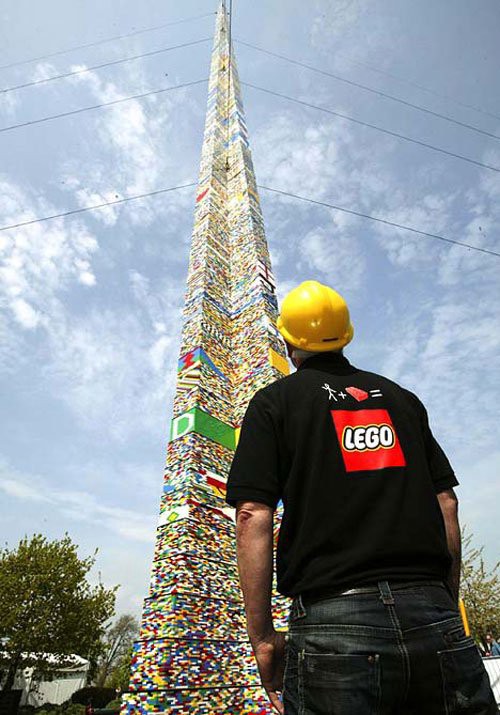

A young Richard Stallman ignited a revolution within the revolution when he grasped the compositional power of software and created the GPL, a legalese-powered ancient blockchain of sorts, which gave rise to the largest collective intellectual work ever built by humankind. It was readily available, and free even beyond the “free beer” and “free speech” senses so often discussed. It was free from us, its creators. Sensory information, and the procedures we derived from it to act on the world in our interest, were compressed into Lego blocks that could be assembled into more and more complex tools. Although Stallman’s vision became fractured as the internet emerged and siloed software, the power of software composition was far from halted.

Some of the most revered installations in contemporary art museums are powerful exercises in semiotics. I remember walking through the corridors of Shanghai’s MoCA and viewing the spectacular Shan Shui Within exhibition, where artists tried to capture the evolution of traditional Chinese culture as it faces our modern era. As I took in the exhibition, my mind started to wander, dreaming of a large installation that would walk through all the pieces of knowledge that go into creating a smartphone. Despite being ubiquitous, smartphones are an unparalleled feat of engineering, and we sometimes forget how much of a revolution they represent. The human effort that goes into each of these machines is worth trillions of dollars. They are the embodiment of knowledge captured over many generations and geographies and encoded as software, readily available for our use. No individual could, in a lifetime, read more than a small fraction of the books that contain the knowledge necessary to build a smartphone. Before the invention of software and the computers that execute it, we simply lacked the tools to build something like that.

Since the third revolution of knowledge, anything new we discover about the world, any new procedure, any new observation, can be captured in software and remain readily available forever. Once again, part of our brains can shrink, since our society no longer needs to perform the difficult task of decoding information from the knowledge pool in order to apply it. It is readily available, and if the movie Idiocracy comes true, its people, as long as they still have computers, could access the most modern algorithms to act upon the world without having any idea of how they work.

The fourth revolution is in motion now, and we are only seeing its start. This time it is happening on the encoding side of our knowledge machine. Speech allowed us to transmit knowledge among humans, the written word enabled us to broadcast it across generations, and software removed the cost of accessing that knowledge while turbocharging our ability to compose any piece of knowledge we created with the existing humanity-level pool. What we now call machine learning has come to remove one of the few remaining costs in our quest to conquer the world: creating knowledge. The point is not that workers will lose their jobs in the near future; this is the revolution that will make much of our intellectual effort to understand the world obsolete. We will be able to craft planes without ever understanding why birds can fly.

Polanyi’s Paradox explores the idea that we are capable of much more than we are able to explain. Even though we can now consume information through written text and through augmented senses, from microscopes to dark energy telescopes, for that knowledge to be captured it still has to pass through our brains and leave them in compressed form: probably first as the excited words of a scientist, then as LaTeX equations, and ultimately as code flowing from the fingers of a programmer. This is an expensive, lossy process. For example, the periodic table is nothing but a lossy, very clever low-dimensional representation of the complex reality of the atomic world. The process of synthesizing knowledge this way is what we call “intelligence” in action.

But what if we could bypass the paradox altogether by removing ourselves from that pipeline? What if we fed the microscope image directly to our computers and let them register the knowledge themselves? Raw recording of data doesn’t sound like much of a revolution, but what if we could record just the bits that matter for leveraging that knowledge later? Isn’t that what the scientist does when she looks for the most elegant equation that can describe hundreds of data points with a few symbols? Maybe our taste for symmetries and patterns is just the result of evolution favoring those who learn faster and remember cheaply.

The ability to discover useful representations of knowledge automatically is the fourth revolution. The techniques for it had been brewing for a long time under the machine learning umbrella, but it was only with the advances of the last decade that learning feature representations became a central part of the discipline, in many ways eclipsing the more intelligence-like parts of decision making. In the world of “deep learning”, compressing, learning, knowing, storing, teaching and decompressing all look the same.

It is on top of this idea of a noisy encoder-decoder that we started to see breakthroughs in translation software. The impressive results of word2vec took the world by storm: one of the first large-scale knowledge embeddings, the result of pointing our “telescope” at an enormous body of written text and asking computers to predict each word from the words around it. To be up to that task, computers had no choice but to learn the underlying structure of language. They learned that queens were to kings as mothers were to fathers. Beyond structure, they indirectly amassed geography and culture (including its prejudices). They learned that apples are more popular than jabuticabas among English speakers, and that we all seem to believe all princes are blue-eyed Europeans. GPT-3, the latest incarnation of this idea, can write poems better than I can.
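
The “queens are to kings as mothers are to fathers” result is vector arithmetic followed by a nearest-neighbor search. The sketch below uses hand-made toy vectors invented purely for illustration; real word2vec vectors are learned from text, not assigned by hand, and the axis meanings here are an assumption to make the numbers readable.

```python
import math

# Hand-made toy vectors, invented for illustration. Real word2vec vectors are
# learned from text and usually have hundreds of dimensions. Axes here loosely
# stand for [royalty, femaleness, parenthood, fruitness].
VECTORS = {
    "king":   [0.9, 0.1, 0.1, 0.0],
    "queen":  [0.9, 0.9, 0.1, 0.0],
    "father": [0.1, 0.1, 0.9, 0.0],
    "mother": [0.1, 0.9, 0.9, 0.0],
    "apple":  [0.0, 0.0, 0.0, 1.0],
}


def cosine(a, b):
    """Cosine similarity: 1.0 means the two vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def analogy(a, b, c):
    """Solve 'a is to b as c is to ?' by finding the word nearest to b - a + c."""
    target = [vb - va + vc for va, vb, vc in zip(VECTORS[a], VECTORS[b], VECTORS[c])]
    # The query words themselves are excluded from the candidates.
    candidates = [w for w in VECTORS if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(VECTORS[w], target))


print(analogy("king", "queen", "father"))  # mother
```

Excluding the three query words mirrors how such analogy tests are commonly scored. With real embeddings the nearest neighbor is only approximately the expected word, and the same arithmetic can surface the prejudices the paragraph mentions.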

But who was teaching that to the computer? It was teaching itself, and in machine learning lingo we started to call this a self-supervised algorithm, a term consolidated by a recent popular article by Yann LeCun. I would rather take a narrower view of what is going on here. The word2vec embedding was created as computers tried to learn the only function for which labeled data is abundant: the identity function. Simply put, the identity function returns a copy of its input. Given 𝑥, the model tries to output 𝑥 as faithfully as it can.
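
Self-supervision in this sense can be sketched in a few lines: raw text is turned into training pairs whose labels come from the text itself. This is a minimal illustration, not word2vec’s actual pipeline; the function names are hypothetical, and word2vec itself predicts words from a window around them (its CBOW and skip-gram variants) rather than only from the words to their left. The second function shows the degenerate case the essay calls the identity function, where every input is its own label.

```python
def next_word_pairs(text, context_size=2):
    """Turn raw text into (context, next word) training pairs.

    No human annotation is involved: the text itself supplies every label.
    """
    words = text.lower().split()
    return [
        (tuple(words[i - context_size:i]), words[i])
        for i in range(context_size, len(words))
    ]


def identity_pairs(samples):
    """The degenerate case: each input is its own target, as in an autoencoder."""
    return [(x, x) for x in samples]


pairs = next_word_pairs("The queen and the king")
# Three pairs; the first one is (("the", "queen"), "and").
```

Any amount of unlabeled text yields labels this way, which is why the supply of training data stops being the bottleneck.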

The deep neural networks we all work with now are capable of memorizing almost anything we feed them. Measuring the error in reproducing the identity function is not yet trivial in all domains, but it certainly is in finite discrete spaces like the vocabularies of NLP, and we are on the verge of seeing that capability expand. By combining the identity function, which provides an infinite stream of labels, with neural networks that encode embeddings and a well-designed choke point, we get a universal compressor generator, one that may be able to capture everything in the world in bits and bytes.
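
A choke point can be illustrated with the smallest possible case: 2-D points squeezed through a one-number bottleneck and then reconstructed. The sketch assumes a linear autoencoder without biases trained on squared error. For that setup the optimal weights are known to span the top eigenvector of the data’s second-moment matrix (PCA without mean-centering), so the sketch computes that optimum directly with power iteration instead of training a neural network by gradient descent. The data points are invented.

```python
import math


def top_direction(points, iters=100):
    """Power iteration on the 2x2 matrix sum(x x^T); returns its top unit eigenvector."""
    sxx = sum(x * x for x, _ in points)
    sxy = sum(x * y for x, y in points)
    syy = sum(y * y for _, y in points)
    u = (1.0, 0.0)  # any start not orthogonal to the answer works
    for _ in range(iters):
        v = (sxx * u[0] + sxy * u[1], sxy * u[0] + syy * u[1])
        norm = math.hypot(v[0], v[1])
        u = (v[0] / norm, v[1] / norm)
    return u


def encode(u, p):
    """The choke point: a 2-D point becomes a single number."""
    return u[0] * p[0] + u[1] * p[1]


def decode(u, z):
    """Best-effort reconstruction of the original point from that number."""
    return (z * u[0], z * u[1])


# Invented data lying close to, but not exactly on, one line through the origin.
points = [(1.0, 2.1), (2.0, 3.9), (-1.0, -2.0), (3.0, 6.1), (-2.0, -4.1)]
u = top_direction(points)
codes = [encode(u, p) for p in points]
recon = [decode(u, z) for z in codes]
error = sum((px - rx) ** 2 + (py - ry) ** 2 for (px, py), (rx, ry) in zip(points, recon))
energy = sum(px ** 2 + py ** 2 for px, py in points)
```

Half the numbers are thrown away, yet the reconstruction error comes only from the small wobble of the points around a single line: the compressor keeps what is regular and discards what is not, which is the sense in which it is lossy.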

As we master Software 2.0, we will become more and more capable of feeding the common pool of models and embedding layers and leveraging it for whatever tasks we want. Language processing, speech recognition and translation have been operating in this mode for a few years now. Image algorithms are incredibly close, and we are now seeing areas that were dominated by combinatorial algorithms, like logistics, moving in the same direction.

This is knowledge 4.0. It only needs sensors and computing power to be generated. We may think of it as an incredibly sophisticated camera. Take enough pictures of the sky across the world, and a thousand years from now someone may be able to say how many millimeters it likely rained in a rural area of California on any day of the twenty-first century. Inject enough people with sensors that monitor blood pressure and composition, cross the models, and we can estimate the heart attack probability for any population that lives in sunny areas. These applications are not unlike the colorization of old pictures or the upscaling of hundred-year-old videos of Paris or New York that have dazzled so many on YouTube. Given 𝑥, produce 𝑥 a century later, no matter how crappy the sensors that captured 𝑥 were. Modern phones already apply machine learning adjustments just to compensate for cheap lenses.

Today medicine is seen as a complex subject, with lots of fragmented information across a vast array of sub-fields: some relatively well understood, like those dealing with the body’s mechanics; others less so, like our internal chemistry; and many still very primitive, like those dealing with the mind. It may be that the human body is so complex that we will never be able to reduce it to something we can understand. But with knowledge 4.0 we won’t need to, and from a pragmatic perspective, doctors won’t stand a chance. Learned embeddings targeting the identity function will be inscrutable, but when used to feed more targeted models they will allow for vastly better diagnoses and treatments. The computer will become a master of any field, but in many fields there is a good chance it won’t be able to explain to us what it learned. As Wittgenstein put it, if a lion could speak, we could not understand it.

As computing power increases and we deploy sensors everywhere, there will be nothing left to learn from primitive instruments like our eyes and ears. Even though these are supported by our incredibly powerful brains, knowledge accumulation is ultimately capped by our ability to talk and to write papers or programs. Neural networks are inspired by how our brains work, but we know the two are far apart, and in fact we are far from knowing how brains work. Regardless, we have not yet discovered telepathy, so brains can only exchange information among themselves very slowly and indirectly, through genes or through the media we know, a rather saturated channel with all its memes. It may be that, despite being dumber, computers will benefit from a faster model of knowledge composition through a hive mind of embeddings and models. This jump is so big that for practical purposes we may say we have escaped Shannon’s law.

So far we have discussed knowledge 4.0 only in its role of encoding data from sensors. There, the identity function enables the learning of everything that has already happened, but that is just the start. With techniques from reinforcement learning, we can also reason about simulated worlds and connect far-apart events as part of the learning process. Our computers can dream, living millions of lives to sense everything and master any subject.

Take DeepMind’s work on chess, shogi and Go with its AlphaZero learning algorithm, and later on StarCraft II with AlphaStar. Given a game engine that confined its dreams to the rules of the game, AlphaZero simply played against itself millions of times, generating its own labeled data. When it faced the strongest existing programs, it outperformed them, because it had experienced so many similar scenarios that it had taught itself how to win. Had those games been played with different rules, never seen by the machine before but well known to its opponents, the machine would likely have been crushed. But within a controlled simulation everything is self-supervised, and if the rules of the simulation transfer to reality, the machine will be unbeatable.
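
The self-play idea can be sketched on a game small enough to solve completely. This is a toy stand-in, not AlphaZero: AlphaZero pairs Monte Carlo tree search with a neural network precisely because chess and Go are far too large to enumerate, while the hypothetical Nim variant below can be searched exhaustively. What it shares with the paragraph’s point is that the only input is the rules; every label comes from the machine playing the game against itself.

```python
from functools import lru_cache

# Hypothetical toy rules (a simple Nim variant): players alternately remove
# 1 to MAX_TAKE stones, and whoever takes the last stone wins.
MAX_TAKE = 3


def legal_moves(stones):
    """The rules are the only input: which moves are allowed from a position."""
    return [k for k in range(1, MAX_TAKE + 1) if k <= stones]


@lru_cache(maxsize=None)
def mover_wins(stones):
    """Label a position by playing out every line of the game against itself.

    Returns True if the player to move wins with perfect play. With no stones
    left, the previous player took the last one, so any([]) correctly gives False.
    """
    return any(not mover_wins(stones - k) for k in legal_moves(stones))


def best_move(stones):
    """Return a move that leaves the opponent lost, or any legal move if none does."""
    moves = legal_moves(stones)
    for k in moves:
        if not mover_wins(stones - k):
            return k
    return moves[0] if moves else None  # None: the game is already over


# Positions that are lost for the player to move, found without any human games:
losing = [n for n in range(13) if not mover_wins(n)]
print(losing)  # [0, 4, 8, 12]
```

Nobody told the program that multiples of four are lost for the player to move; it discovered that from the rules alone, in the same spirit, though at a vastly smaller scale, as the strategies AlphaZero found.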

Moreover, in simple prediction as enabled by the identity function discussed above, the path to reconstructing the input is often something we humans can relate to: it is just filling in details from memory and common sense. But when AlphaZero was learning to play chess at a superhuman level, it was never constrained by common sense. “It doesn’t play like a human, and it doesn’t play like a program,” said DeepMind co-founder Demis Hassabis, adding: “it plays in a third, almost alien, way.”

Reinforcement learning backpropagates knowledge across far-apart events, making not only the knowledge representation inscrutable but any intermediate actions derived from it equally cryptic. High-level Go players often have trouble describing why a given move is good, but when AlphaZero is playing, the effect of Polanyi’s paradox is in a different league altogether. For it to explain to us why it played a given move would be not unlike a human player explaining a move to a cat. Yes, there is a whole field of research on explainable models, but ultimately there is a trade-off between being able to explain intellectual activities to humans and performing them at a superhuman level.
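
The mechanism that carries credit across far-apart events can be sketched with the discounted return, one common building block of reinforcement learning. This is a generic illustration rather than AlphaZero’s training code (AlphaZero trains its value estimates on final game outcomes gathered through self-play and search), and the discount factor gamma = 0.9 is an arbitrary assumption.

```python
def discounted_returns(rewards, gamma=0.9):
    """Propagate each reward back to every earlier step of an episode.

    Implements G_t = r_t + gamma * G_{t+1}, computed from the last step backwards,
    so a reward at the end of a long game still reaches the first move.
    """
    returns = [0.0] * len(rewards)
    future = 0.0
    for t in reversed(range(len(rewards))):
        future = rewards[t] + gamma * future
        returns[t] = future
    return returns


# A game in which only the final, winning move is rewarded:
credit = discounted_returns([0, 0, 0, 1])
```

Here the early moves receive credit of roughly 0.729, 0.81 and 0.9 for a win that only materialized at the end. Nothing in those numbers says why the first move mattered, which is exactly the kind of opacity the paragraph describes.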

The knowledge 4.0 revolution allows us to freeze a frame of the world simply by training for the identity function. That alone is extremely powerful, as the future tends to resemble the past, but it goes further by allowing us to apply the same technique to every imaginary world we can describe, and then to mimic the complex sequences of actions it discovers to be successful in those worlds. There is no need to drive millions of hours and crash thousands of cars in the real world to teach a computer to drive. With knowledge 4.0, we can add an incredibly detailed version of São Paulo to GTA and let the computer learn to drive there. Yes, the physics engine won’t be as perfect as reality, but it might be close enough, and it will get better every year. We are seeing this play out right now as large technology companies combine mapping services with sensors deployed in existing vehicles to train models that mix observation, simulation and prediction in their rush to build self-driving cars.

I guess this is our task now: throwing sensors everywhere so that machines become our eyes, and playing “what if” games with them just to watch them master each of those make-believe setups. Human thinking won’t be abolished; after all, the former is a rather challenging engineering task, and the latter a deep intellectual one. But the why? Well, that word is gone.